Who is afraid of Artificial Intelligence? And why?

An explanation of this image that I posted on Twitter here. 7 April 2023

“Mommy! I'm afraid of AI.”

In March 2023, this was one of many news reports about Elon Musk, other "prominent" computer scientists, and other "tech industry notables" endorsing this document from the Future of Life Institute.

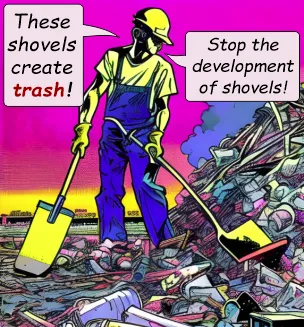

The document advocates a six-month pause in the development of artificial intelligence so that "we" can "consider the risks", and says that if the AI businesses refuse, the government should stop them.

What are the risks of AI?

The document claims that the AI software "can pose profound risks to society and humanity" by (1) creating disinformation and (2) eliminating some jobs.

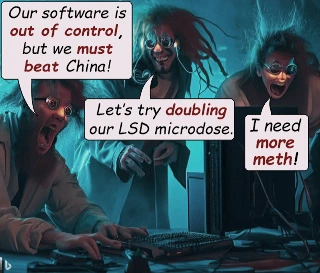

Two other concerns are that the AI businesses are (3) "locked in an out-of-control race" to improve software that (4) "no one – not even their creators – can understand, predict, or reliably control."

Be suspicious of secretive heroes

Elon Musk endorsed that document, but who wrote it? And who is the "we" that will consider the risks and make the decisions about what to do about AI?

It is dangerous to follow secretive people. A person who is truly talented will be proud of himself, and we will be impressed with his achievements. He will not be ashamed of himself, or have any reason to hide from us. This is true regardless of whether his talent is in athletics, music, chemistry, carpentry, farming, or AI software.

Although people often need to be secretive while they investigate a crime, the "experts" who claim to be providing us with intelligent analyses or advice need secrecy only if they are trying to manipulate, deceive, exploit, and abuse us.

Be suspicious of endorsements

One method of increasing the popularity of something is to provide an intelligent explanation of how it is beneficial.

Unfortunately, the majority of people don't have much of an interest in thinking.

Therefore, the most common method is to use emotional stimulation, such as advertisements, brochures, and videos that titillate us with unrealistic promises, or with images of babies, sexually attractive people, or people enjoying the product.

Another method is "celebrity branding", in which a famous person endorses the item. This method is so popular that there are businesses, such as The CShop, that help organizations arrange for celebrity endorsements.

A variation of celebrity branding is to deceive people into believing that a celebrity created the item, or is a top official in the organization that created the item (an example is Kylie Krisprs).

There are more than 1,500,000 nonprofit organizations in the USA, and many of them convince wealthy and famous people to donate money to them or provide them with credibility.

If the celebrities were truly providing us with intelligent advice, then their endorsements would be beneficial, but if they are pushed or paid to endorse a product that they don't know much about, then they are analogous to a worm on a fishing hook. Is the Future of Life Institute using Elon Musk as "fishing bait"?

The advertisements that are designed for scientists contain a lot of intelligent information, but the advertisements designed for the typical person are emotional titillation. The advertisements for children are even less intelligent.

Therefore, when a group of "prominent scientists" and other "experts" use emotional titillation to promote complex concepts that most people are ignorant about, we ought to wonder why they are promoting such ideas to the public, who don't understand the issue, rather than to other "prominent scientists" and "experts" who would be able to understand their brilliant ideas.

This should be considered evidence that they don't have anything intelligent to say, so they are trying to get the support of the public. This could be described as "scraping the bottom of the barrel" for support.

They are fearmongers, not leaders

The secretive people who wrote that document don't provide an explanation for how AI software can harm us, or how pausing the development of the software will help us.

We don't learn anything about AI software from their document because they are not providing an intelligent analysis of the issue. Instead, they use adjectives and phrases that are intended to stimulate our emotion of fear.

My conclusion is that they are not trying to help us to understand the risks of AI software.

Rather, they are trying to use fear to manipulate the public into allowing them to get control of the software. That will allow them to modify the software to give them the results they want, while fooling us into believing that the modifications are protecting us.

To understand my accusation, here is my analysis of their four concerns mentioned above.

The Internet freed us from being dependent upon a few media businesses for information about the world.